Introduction

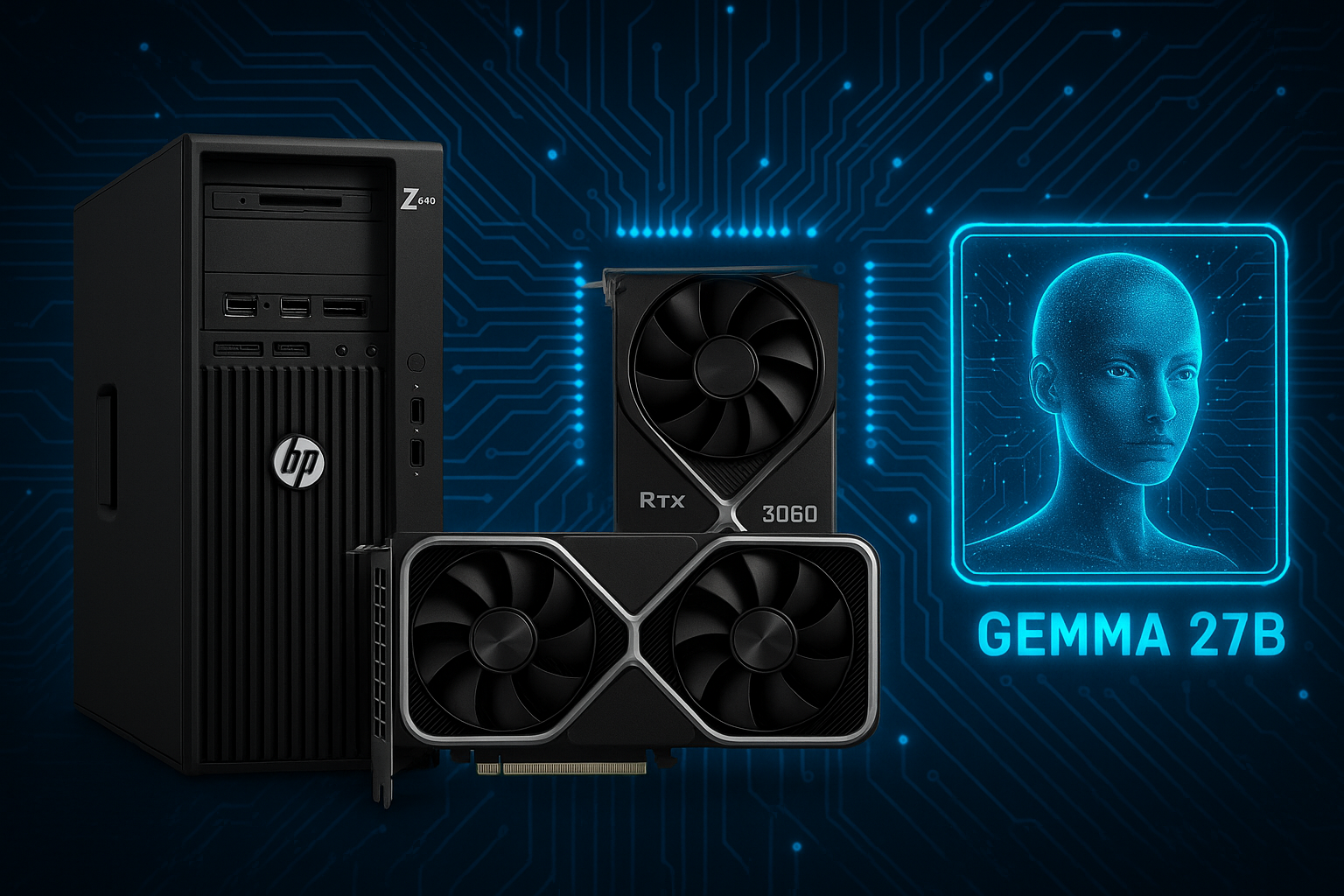

The HP Z440 workstation has emerged as an exceptional foundation for budget-conscious AI server builds, offering enterprise-grade reliability at affordable prices. When paired with dual NVIDIA RTX 3060 12GB GPUs, this setup provides 24GB of total VRAM—sufficient for running sophisticated AI models like Google's Gemma 27B locally while maintaining complete data privacy and control[^1][^2].

This comprehensive guide will walk you through transforming an HP Z440 into a powerful AI inference server capable of running large language models, handling machine learning workloads, and supporting various AI applications without relying on cloud services.

Hardware Overview: HP Z440 Workstation

System Specifications

The HP Z440 workstation, manufactured between 2015-2018, represents HP's professional-grade computing platform designed for demanding workloads[^3][^4]. Key specifications include:

Processor Support:

- Intel Xeon E5-1600 v3/v4 series (4-8 cores)

- Intel Xeon E5-2600 v3/v4 series (up to 22 cores)

- Single socket configuration with C612 chipset[^5]

Memory:

- 8 DIMM slots supporting up to 512GB DDR4 ECC

- Memory speeds: 2133MHz (v3) to 2400MHz (v4)[^4]

Expansion:

- 2x PCIe Gen3 x16 slots (perfect for dual GPUs)

- 1x PCIe Gen3 x8, 1x PCIe Gen2 x4, 1x PCIe Gen2 x1

- 1x PCI slot[^3]

Power Supply:

- 525W standard or 700W high-end option

- The 700W PSU includes two 6-pin GPU power cables rated at 18A each (216W per cable)[^6][^7]

Storage:

- 2 internal 3.5" bays

- 2 external 5.25" bays

- 6x SATA 6Gb/s ports

- No native NVMe support (requires PCIe adapter)[^3]

GPU Configuration: Dual RTX 3060 12GB

Why RTX 3060 12GB?

The RTX 3060 12GB stands out as the optimal choice for budget AI builds due to several key advantages[^8][^9]:

VRAM Advantage:

- 12GB GDDR6 memory per card (24GB total with dual setup)

- More VRAM than the faster RTX 3080 (10GB) or RTX 3060 Ti (8GB)

- Essential for loading large language models like Gemma 27B[^10]

AI Performance:

- 3,584 CUDA cores with Ampere architecture

- 2nd generation RT cores and 3rd generation Tensor cores

- Dedicated AI acceleration capabilities[^11]

Power Efficiency:

- 170W TDP per card (340W total)

- Well within the Z440's 700W PSU capacity with 225W GPU allocation[^6]

Power Considerations

The HP Z440's 700W PSU can support dual RTX 3060s with proper power management[^6]:

- GPU Power Budget: 225W total (per HP specifications)

- Actual Requirement: 340W for dual RTX 3060s

- Solution: HP's 6-pin connectors are rated at 18A@12V = 216W each, exceeding standard ATX specifications[^7][^12]

Power Adapter Requirements: Each RTX 3060 requires an 8-pin power connector, but the Z440 provides 6-pin connectors. Quality 6-pin to 8-pin adapters are necessary[^13][^14]:

- Use reputable brands with proper wire gauge (18AWG minimum)

- Avoid cheap adapters that may cause overheating or fire hazards[^15][^12]

Understanding Gemma 27B

Model Overview

Google's Gemma 27B represents a significant advancement in open-source large language models[^16][^17]:

Key Specifications:

- 27 billion parameters

- 8K token context window (Gemma 3: 128K tokens)

- Trained on 13-14 trillion tokens

- Multilingual support (140+ languages)

- Commercial use permitted[^18][^19]

Hardware Requirements

VRAM Requirements:

- Full Precision (BF16): ~54GB VRAM

- 8-bit Quantization: ~29GB VRAM

- 4-bit Quantization (Q4_K_M): ~15-17GB VRAM[^16][^20][^21]

Optimal Configuration: For the dual RTX 3060 setup (24GB total VRAM), Gemma 27B will run efficiently using 4-bit quantization, providing excellent performance while maintaining model quality[^20][^22].

Software Stack Setup

Base Operating System: Proxmox VE

Proxmox Virtual Environment provides an ideal foundation for AI server deployment[^23][^24]:

Benefits:

- Type-1 hypervisor for maximum performance

- GPU passthrough capabilities

- Easy backup and restoration

- Web-based management interface

- LXC container support for efficient resource utilization

Installation Process:

- Create Proxmox installation media

- Enable IOMMU and VT-d in BIOS

- Configure GRUB for GPU passthrough

- Install Proxmox VE 8.x

GPU Passthrough Configuration

Configure GPU passthrough to enable direct hardware access[^23][^25]:

# Edit GRUB configuration

nano /etc/default/grub

GRUB_CMDLINE_LINUX_DEFAULT="quiet intel_iommu=on iommu=pt pcie_acs_override=downstream,multifunction nofb nomodeset video=vesafb:off,efifb:off"

# Update GRUB

update-grub

# Configure VFIO modules

echo "vfio" >> /etc/modules

echo "vfio_iommu_type1" >> /etc/modules

echo "vfio_pci" >> /etc/modules

echo "vfio_virqfd" >> /etc/modules

# Blacklist GPU drivers

echo "blacklist nouveau" >> /etc/modprobe.d/blacklist.conf

echo "blacklist nvidia" >> /etc/modprobe.d/blacklist.conf

# Reboot and verify

reboot

lspci -v | grep -E "(VGA|3D)"

Container Setup: LXC for AI Workloads

Create an LXC container for optimal performance[^2]:

Container Configuration:

- Ubuntu 22.04 LTS base image

- Privileged container for GPU access

- Sufficient RAM allocation (8-16GB)

- GPU device passthrough

# Create LXC container

pct create 100 ubuntu-22.04-standard_22.04-1_amd64.tar.xz \

--memory 16384 \

--cores 8 \

--rootfs local-lvm:32 \

--net0 name=eth0,bridge=vmbr0,ip=dhcp \

--features nesting=1 \

--unprivileged 0

# Add GPU devices

pct set 100 -dev0 /dev/nvidia0

pct set 100 -dev1 /dev/nvidia1

pct set 100 -dev2 /dev/nvidiactl

pct set 100 -dev3 /dev/nvidia-uvm

AI Software Installation

Ollama: Local LLM Management

Ollama provides the foundation for running language models locally[^26][^27]:

Installation:

# Install Ollama

curl -fsSL https://ollama.com/install.sh | sh

# Verify installation

ollama --version

# Pull Gemma 27B model

ollama pull gemma2:27b

Configuration:

# Set environment variables for network access

export OLLAMA_HOST=0.0.0.0:11434

export OLLAMA_ORIGINS=*

# Start Ollama service

systemctl enable ollama

systemctl start ollama

OpenWebUI: Web Interface

OpenWebUI provides a ChatGPT-like interface for local AI models[^28][^29]:

Docker Installation:

# Install Docker

curl -fsSL https://get.docker.com -o get-docker.sh

sudo sh get-docker.sh

# Run OpenWebUI

docker run -d --name open-webui \

-p 3000:8080 \

-e OLLAMA_BASE_URL=http://localhost:11434 \

-v open-webui:/app/backend/data \

--restart unless-stopped \

ghcr.io/open-webui/open-webui:main

Features:

- Multi-model support

- Chat history and management

- User authentication and roles

- RAG (Retrieval Augmented Generation) capabilities

- API compatibility

NVIDIA Driver Installation

Install appropriate NVIDIA drivers for RTX 3060 support:

# Add NVIDIA repository

wget https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2204/x86_64/cuda-keyring_1.0-1_all.deb

sudo dpkg -i cuda-keyring_1.0-1_all.deb

sudo apt update

# Install CUDA toolkit and drivers

sudo apt install cuda-toolkit-12-2

sudo apt install nvidia-driver-535

# Verify installation

nvidia-smi

Performance Optimization

Memory Management

Optimize system memory for AI workloads:

Swap Configuration:

# Disable swap for better performance

sudo swapoff -a

sudo sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

# Configure memory overcommit

echo 'vm.overcommit_memory = 1' >> /etc/sysctl.conf

VRAM Optimization:

- Enable GPU memory pooling for efficient utilization

- Configure dynamic VRAM allocation based on model requirements

- Monitor memory usage with

nvidia-smiandnvtop

Model Quantization

Optimize Gemma 27B for dual RTX 3060 setup:

Quantization Options:

- Q4_K_M: ~15GB VRAM, good balance of quality and performance

- Q4_K_S: ~14GB VRAM, slightly reduced quality for better performance

- Q6_K: ~20GB VRAM, higher quality if VRAM permits[^30]

Performance Expectations:

- Inference Speed: 15-25 tokens/second with dual RTX 3060s

- Context Length: 8K tokens (Gemma 2) or 128K tokens (Gemma 3)

- Concurrent Users: 2-5 simultaneous chat sessions

Network Configuration

Configure network access for remote usage:

# Configure firewall

sudo ufw allow 3000/tcp # OpenWebUI

sudo ufw allow 11434/tcp # Ollama API

# Set up reverse proxy (optional)

sudo apt install nginx

# Configure nginx for SSL and domain mapping

Monitoring and Maintenance

System Monitoring

Implement comprehensive monitoring:

GPU Monitoring:

# Install monitoring tools

sudo apt install nvtop htop

# Monitor GPU utilization

watch -n 1 nvidia-smi

# Temperature monitoring

nvidia-smi -q -d TEMPERATURE

Performance Metrics:

- GPU utilization and memory usage

- CPU load and temperature

- System memory consumption

- Network throughput

- Model inference latency

Backup and Recovery

Implement robust backup strategies:

Container Backups:

# Create LXC snapshots

pct snapshot 100 "pre-update-$(date +%Y%m%d)"

# Backup container

vzdump 100 --compress gzip --storage local

Configuration Backups:

- Ollama model directory

- OpenWebUI user data and settings

- System configurations and scripts

Advanced Configuration

Multi-Model Support

Configure the system to run multiple AI models:

Model Management:

# Pull additional models

ollama pull codellama:13b

ollama pull mistral:7b

ollama pull llava:13b # Multimodal model

# List available models

ollama list

API Integration

Enable API access for external applications:

Ollama API:

- REST API at

http://localhost:11434/api - Compatible with OpenAI API format

- Support for streaming responses

Usage Examples:

import requests

response = requests.post('http://localhost:11434/api/generate',

json={'model': 'gemma2:27b', 'prompt': 'Explain quantum computing'})

Custom Model Fine-tuning

Prepare the system for model customization:

Requirements:

- Additional storage for training data

- Extended memory for fine-tuning processes

- Backup GPU compute for training workloads

Troubleshooting Common Issues

GPU Recognition Problems

Symptoms: GPUs not detected by Ollama Solutions:

- Verify NVIDIA driver installation

- Check GPU passthrough configuration

- Restart Ollama service

- Validate container GPU access

Memory Issues

Symptoms: Out of memory errors during model loading Solutions:

- Use smaller quantized models

- Adjust context window size

- Monitor VRAM usage with

nvidia-smi - Implement model swapping for multi-model setups

Performance Bottlenecks

Symptoms: Slow inference speeds Solutions:

- Verify GPU utilization

- Check CPU bottlenecks

- Optimize quantization settings

- Monitor system temperature and throttling

Cost Analysis

Hardware Investment

HP Z440 Workstation: $400-600 (used market) Dual RTX 3060 12GB: $500-700 ($250-350 each) Memory Upgrade: $100-200 (32-64GB DDR4 ECC) Storage (NVMe): $100-150 (1TB M.2 + PCIe adapter) Power Adapters: $20-30 Total: $1,120-1,680

Operating Costs

Power Consumption: ~450W under load Monthly Electricity: $25-40 (depending on rates) Maintenance: Minimal (enterprise-grade hardware)

Cost Comparison

Cloud Alternative: GPT-4 API usage

- $10-30+ per million tokens

- Limited privacy and control

- Ongoing subscription costs

Local AI Server Benefits:

- One-time hardware investment

- Complete data privacy

- Unlimited usage

- Custom model deployment

- 24/7 availability

Future Upgrades and Scalability

Memory Expansion

The Z440 supports up to 512GB DDR4 ECC memory, enabling:

- Larger model deployment

- Multiple simultaneous models

- Extended context windows

- Enhanced performance

Storage Upgrades

Options:

- Multiple NVMe drives via PCIe adapters

- High-capacity SATA SSDs for model storage

- Network-attached storage for backups

GPU Upgrades

Considerations:

- RTX 4060 Ti 16GB for more VRAM

- Single RTX 4090 24GB for maximum performance

- Professional cards (RTX A6000) for compute workloads

Conclusion

The HP Z440 workstation with dual RTX 3060 12GB GPUs represents an exceptional foundation for building a powerful, cost-effective AI server capable of running sophisticated models like Gemma 27B. This configuration provides 24GB of total VRAM, enterprise-grade reliability, and excellent price-to-performance ratio for local AI deployment.

By following this comprehensive guide, you'll have a fully functional AI server that offers complete data privacy, unlimited usage, and the flexibility to experiment with various AI models and applications. The combination of proven enterprise hardware, modern AI software stack, and proper optimization delivers professional-grade AI capabilities at a fraction of commercial solutions' cost.

Whether you're a researcher, developer, or enthusiast, this build provides the perfect platform for exploring the frontiers of artificial intelligence while maintaining complete control over your data and computational resources. The investment in local AI infrastructure pays dividends through unlimited usage, privacy protection, and the ability to adapt and scale as your needs evolve.

No comments:

Post a Comment