Introduction

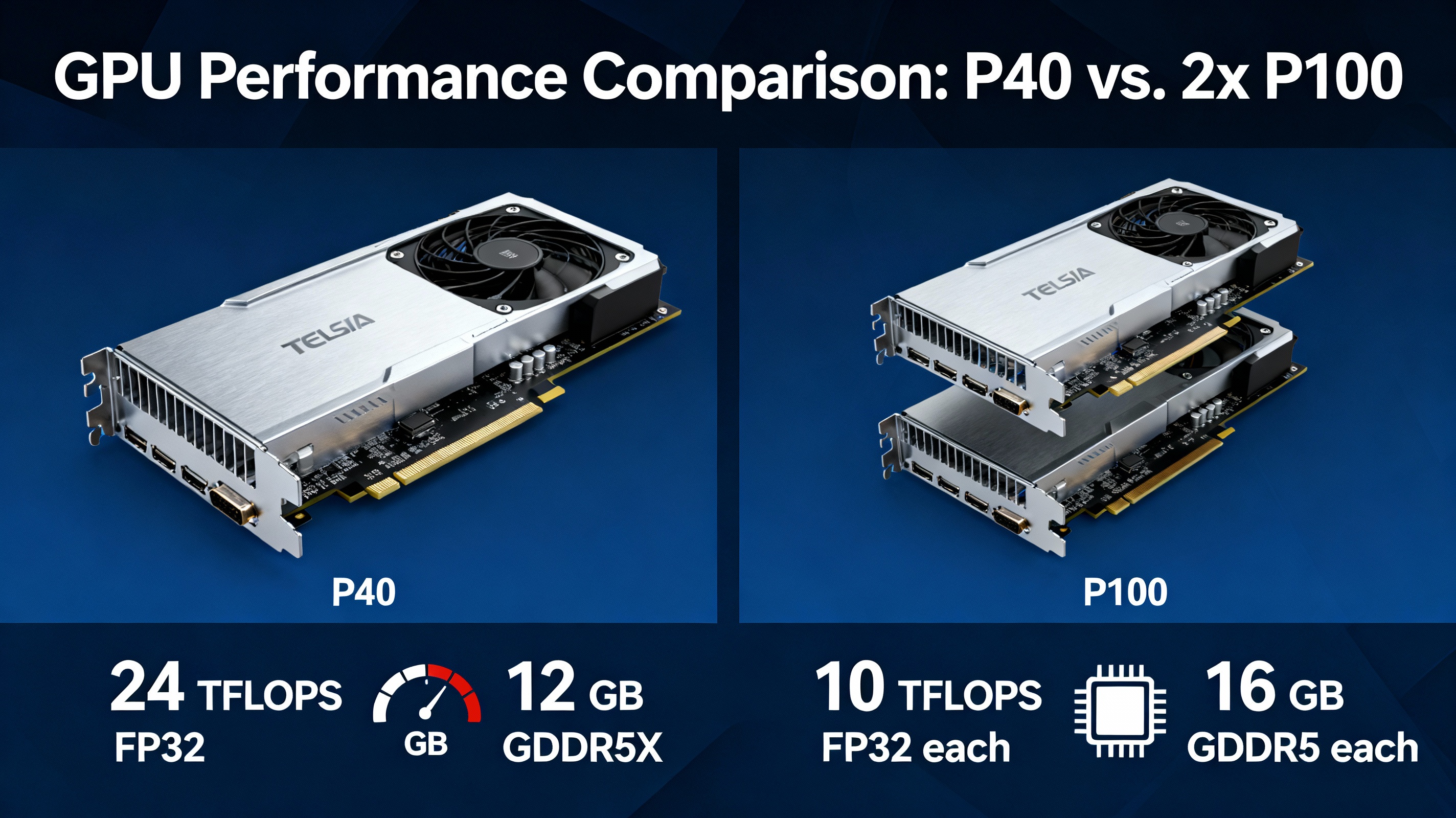

Comparing one NVIDIA Tesla P40 against two Tesla P100 GPUs is relevant for professionals choosing between a single card with a large memory pool (P40) and higher aggregate compute throughput (two P100s), especially for deep learning, scientific computing, and large-scale visualization workloads. The comparison below covers architecture, specifications, and practical considerations.

Architecture and Specification Overview

| Feature | Tesla P40 (x1) | Tesla P100 (x2, PCIe 16GB) |
|---|---|---|
| Architecture | Pascal (GP102) | Pascal (GP100) |
| Release Date | Sep 2016 | Jun 2016 |
| CUDA Cores | 3840 | 3584 per GPU (7168 total) |
| Base/Boost Clock | 1303/1531 MHz | 1190/1329 MHz (each) |
| VRAM | 24 GB GDDR5 | 16 GB HBM2 per GPU (32 GB total, as two separate 16 GB pools) |
| Memory Bandwidth | About 346 GB/s (NVIDIA datasheet; some spec databases list 694.3 GB/s) | 732.2 GB/s per GPU (1464.4 GB/s total) |
| FP32 Performance | 11.76 TFLOPS | 9.53 TFLOPS per GPU (19.06 TFLOPS total) |
| FP16 (Half) | About 184 GFLOPS (1:64) | 19.05 TFLOPS (2:1, per GPU) |
| FP64 (Double) | 368 GFLOPS (1:32) | 4.76 TFLOPS (1:2, per GPU) |
| Power (TDP) | 250W | 250W per GPU (500W total) |
| Interface | PCIe 3.0 x16 | PCIe 3.0 x16 (each) |
| Tensor Cores | None (Pascal predates Tensor Cores) | None (Pascal predates Tensor Cores) |
| Outputs | No display outputs | No display outputs |

All specifications are per card unless noted otherwise[1][2][3].
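The FP32 figures in the table follow from the usual peak-throughput estimate: CUDA cores times boost clock times 2, since one fused multiply-add counts as two floating-point operations. The sketch below reproduces that arithmetic from the core counts and boost clocks listed above; the function name `peak_fp32_tflops` is illustrative, and the result is a theoretical ceiling rather than a measured number.

```python
def peak_fp32_tflops(cuda_cores: int, boost_mhz: float) -> float:
    """Theoretical peak FP32 throughput in TFLOPS.

    Assumes every CUDA core retires one FMA (2 FLOPs) per clock at boost speed.
    """
    flops = cuda_cores * boost_mhz * 1e6 * 2
    return flops / 1e12


# Core counts and boost clocks from the specification table.
p40 = peak_fp32_tflops(3840, 1531)
p100 = peak_fp32_tflops(3584, 1329)

print(f"P40:  {p40:.2f} TFLOPS")
print(f"P100: {p100:.2f} TFLOPS per card, {2 * p100:.2f} TFLOPS for two cards")
```

The two-card total computed this way can differ from the table's 19.06 TFLOPS in the last digit, because the table doubles the already-rounded per-card value.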

Key Differences

Memory Capacity and Type

- P40: Offers 24 GB of GDDR5 memory on a single card, making it attractive for workloads that need one large memory pool, such as large-batch inference or models that do not fit into a smaller VRAM[1][3].

- P100: Each card has 16 GB of HBM2, so two cards provide 32 GB in total. That total is split into two separate 16 GB pools, so any model that is not sharded across the cards must still fit in 16 GB. HBM2 delivers much higher bandwidth per card (732.2 GB/s, versus about 346 GB/s in NVIDIA's datasheet for the P40), and two P100s together far exceed the P40 in aggregate memory bandwidth[1].

Computational Performance

- P40: Delivers 11.76 TFLOPS of FP32 performance. It has higher clock speeds and more CUDA cores than a single P100, but not enough to match two P100s together.

- P100: Each card provides 9.53 TFLOPS FP32, so two cards offer 19.06 TFLOPS combined, about 1.6 times the P40's peak, provided the workload scales across both GPUs. The P100's HBM2 memory and far higher FP64 and FP16 rates also make it a better fit for scientific and mixed-precision workloads[1][2][3].

Power and Thermal Considerations

- P40: Single card, 250W TDP.

- P100: Two cards, 500W TDP total, requiring a robust power supply and cooling solution. For dense deployments, power and heat may become limiting factors.
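To put the power figures in context, peak throughput can be divided by TDP. The sketch below does this with the peak numbers and TDP values from the specification table; TDP is an upper bound on board power rather than a measured draw, so the results are only a rough guide.

```python
# Peak throughput and TDP taken from the specification table.
configs = {
    "1x P40": {"fp32_tflops": 11.76, "fp64_tflops": 0.368, "tdp_w": 250},
    "2x P100": {"fp32_tflops": 19.06, "fp64_tflops": 9.52, "tdp_w": 500},
}


def gflops_per_watt(tflops: float, watts: float) -> float:
    """Peak throughput per watt of TDP, in GFLOPS/W."""
    return tflops * 1000 / watts


for name, c in configs.items():
    fp32 = gflops_per_watt(c["fp32_tflops"], c["tdp_w"])
    fp64 = gflops_per_watt(c["fp64_tflops"], c["tdp_w"])
    print(f"{name}: {fp32:.1f} GFLOPS/W (FP32), {fp64:.1f} GFLOPS/W (FP64)")
```

On these peak numbers the single P40 is somewhat more efficient for FP32, while the P100 pair is far ahead for FP64.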

Cost and Availability

Both cards launched at similar MSRPs, but as of 2025 they are end-of-life and available mainly on the secondary market. Actual pricing depends on supply and demand. Two P100s will generally cost more than one P40, although performance per dollar may favor the P100 pair for compute-bound tasks[1][2].

Performance in Real-World Workloads

Deep Learning Training

- FP32/FP16 Performance: Two P100s collectively outpace a single P40 for most compute-heavy tasks, especially in training large neural networks, due to their combined FP32/FP16 throughput and memory bandwidth[1][3].

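- Large Model Support: The P40's 24 GB may allow larger batch sizes or models that do not fit into a single P100's 16 GB. With two P100s, data parallelism increases the total batch size but still requires the model to fit in 16 GB per card, while model parallelism can split a model across both cards and use up to 32 GB, at the cost of inter-GPU communication over PCIe.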
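The memory trade-off can be made concrete with a rough weights-only estimate. The sketch below is an illustration under stated assumptions: the 9-billion-parameter model is hypothetical, gigabytes are treated as decimal (1e9 bytes), and activations, optimizer state, and framework overhead are ignored, so real requirements will be higher.

```python
GB = 1e9  # decimal gigabytes; a simplification


def weights_gb(num_params: float, bytes_per_param: int) -> float:
    """Memory needed for the model weights alone, in GB."""
    return num_params * bytes_per_param / GB


def fits(num_params: float, bytes_per_param: int, vram_gb: float) -> bool:
    """True if the weights alone fit in the given amount of VRAM."""
    return weights_gb(num_params, bytes_per_param) <= vram_gb


model_params = 9e9  # hypothetical 9-billion-parameter model
need = weights_gb(model_params, 2)  # 2 bytes per parameter (FP16 storage)

print(f"Weights: {need:.0f} GB")
print("Fits on one P40 (24 GB):    ", fits(model_params, 2, 24))
print("Fits on one P100 (16 GB):   ", fits(model_params, 2, 16))
print("Fits split across two P100s:", fits(model_params, 2, 32))
```

In this example the weights fit on a single P40 but need model parallelism on the P100 pair, since neither 16 GB card can hold them alone.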

Inference and Batch Processing

- Memory-Limited Workloads: The P40’s 24 GB is beneficial for inference tasks with very large models or data batches.

- Throughput-Limited Workloads: Two P100s offer higher total throughput, making them better for scenarios where batch processing speed is critical.

Scientific Computing

- FP64 Performance: The P100 is vastly superior for double-precision workloads, offering 4.76 TFLOPS per card, while the P40 is limited to 368 GFLOPS per card (1:32 ratio)[1].

- Memory Bandwidth: The P100’s HBM2 memory and higher aggregate bandwidth with two cards make it the clear choice for bandwidth-sensitive scientific applications.

Practical Considerations

- Scalability: Two P100s require proper PCIe lane allocation and may face physical space and cooling constraints in some systems.

- Software Support: Multi-GPU setups need frameworks and applications that support data/model parallelism. Not all software scales efficiently to two GPUs.

- No Display Outputs: Both cards are compute-focused and lack display outputs, so neither can drive a monitor directly.
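Because multi-GPU scaling is rarely perfect, it helps to ask how well a workload must scale before two P100s beat one P40. The sketch below uses a simple model in which effective throughput is per-GPU peak times GPU count times a scaling efficiency between 0 and 1; that model and the function name `effective_tflops` are illustrative assumptions, and only the peak FP32 figures come from the specification table.

```python
P40_FP32_TFLOPS = 11.76   # single card, from the specification table
P100_FP32_TFLOPS = 9.53   # per card, from the specification table


def effective_tflops(per_gpu_tflops: float, num_gpus: int, scaling_efficiency: float) -> float:
    """Effective throughput under a simple linear-scaling model.

    scaling_efficiency = 1.0 means perfect scaling; lower values model
    communication and synchronization overhead.
    """
    return per_gpu_tflops * num_gpus * scaling_efficiency


# Efficiency at which two P100s exactly match one P40 on peak FP32.
break_even = P40_FP32_TFLOPS / (2 * P100_FP32_TFLOPS)
print(f"Break-even scaling efficiency: {break_even:.1%}")

for eff in (0.6, 0.8, 0.95):
    tflops = effective_tflops(P100_FP32_TFLOPS, 2, eff)
    verdict = "ahead of" if tflops > P40_FP32_TFLOPS else "behind"
    print(f"efficiency {eff:.0%}: {tflops:.2f} TFLOPS, {verdict} one P40")
```

Under this model, the P100 pair only pulls ahead on FP32 once the workload scales at better than roughly 62% efficiency across the two cards.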

Summary Table

| Category | Tesla P40 (x1) | Tesla P100 (x2) |
|---|---|---|
| VRAM | 24 GB GDDR5 | 32 GB HBM2 (2 x 16 GB) |
| Memory Bandwidth | About 346 GB/s (NVIDIA datasheet) | 1464.4 GB/s (aggregate) |
| FP32 Performance | 11.76 TFLOPS | 19.06 TFLOPS |
| FP64 Performance | 368 GFLOPS | 9.52 TFLOPS |
| Best For | Large-memory inference | Compute-heavy training, HPC |
| Power Consumption | 250W | 500W |
| Price (Secondary) | Lower | Higher |

Conclusion

- Choose the Tesla P40 if your primary constraint is per-card memory capacity for large-model inference or other memory-bound workloads, and cost, space, or power is limited[3].

- Choose two Tesla P100s if you need maximum compute throughput, memory bandwidth, and double-precision performance for scientific computing or large-scale deep learning training[1][3].

- General Rule: For most high-performance computing and deep learning training scenarios, two P100s will outperform a single P40. The P40 remains the better choice when a workload needs more than 16 GB on a single GPU, though that case is becoming less common as frameworks and hardware evolve.

In summary: Two Tesla P100s deliver roughly 1.6 times the peak FP32 throughput and one-third more total memory (32 GB versus 24 GB) than a single P40, at the cost of higher power and space requirements. The P40 shines only when its larger per-card memory is necessary and scaling to two GPUs is not feasible.
